Authors

Isra K. Elsaadany, BS1, Hang-Ling Wu, BS2, Jessica M. Gonzalez-Vargas, PhD3, Jason Z. Moore, PhD2, Scarlett R. Miller, PhD1,3

1Department of Industrial and Manufacturing Engineering, Penn State, University Park, PA

2Department of Mechanical and Nuclear Engineering, Penn State, University Park, PA

3School of Engineering Design and Innovation, Penn State, University Park, PA

Conflict of Interest Statement

Research reported in this work was supported by the Chester Ray Trout Chair in Pediatric Surgery at Penn State’s Children Hospital. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Chester Ray Trout Chair in Pediatric Surgery at Penn State’s Children Hospital. Coauthors Dr. Miller and Dr. Moore own equity in Medulate, which may have a future interest in this project. Company ownership has been reviewed by the University’s Individual Conflict of Interest Committee and is currently being managed by the University.

Corresponding Author

Scarlett R. Miller, PhD, Department of Industrial and Manufacturing Engineering, School of Engineering Design and Innovation, Penn State, University Park, PA

(Email: scarlettmiller@psu.edu)

Abstract

Introduction: Pediatric laparoscopic surgery (PLS) is an important procedure; however, complications can reach up to 28%. Despite this, pediatric simulation-based training (SBT) has received little scientific attention. To advance pediatric SBT, we aimed to evaluate initial face, content, and construct validity of a new Augmented Reality (AR) simulator for PLS since it has been shown to reduce medical errors.

Methods: Four experts and eleven novice residents from Hershey Medical Center were assigned to one of four conditions and performed a peg transfer on: (a) box trainer (BT) with no feedback (NF), then pediatric trainer (PT) with NF, (b) BT with NF, then PT with feedback (F), (c) BT with F, then PT with NF, (d) BT with F, then PT with F. Face/content validity was assessed using a 5-point Likert scale (1 = not realistic/useful, 5 = very realistic/useful). Construct validity was measured using time and errors (pegs dropped).

Results: Face validity results showed that the AR simulator was perceived as realistic on all statements (3.6 ± 0.9), including realism in training basic pediatric skills like depth perception (4.0 ± 0.8). Content validity results showed the simulator’s usefulness on all statements (3.9 ± 0.8), including as a training/testing tool (4.1 ± 0.7). Construct validity results showed statistically significant differences by expertise for time (p = 0.002) and number of errors (p = 0.012).

Conclusions: The AR simulator demonstrated initial face, content, and construct validity for the peg transfer task. As such, it may be used to improve training in PLS with further development and validation across other laparoscopic tasks.

Introduction

Minimally invasive surgery (MIS) is performed over 10 million times annually in the United States for abdominal surgeries (Mattingly et al., 2022), resulting in less post-operative pain and fewer complications (Sood et al., 2017). Over the past 20 years, there has been a significant rise in the use of MIS on children (Uecker et al., 2020). One MIS procedure where training can be improved is laparoscopic surgery (Minimally Invasive Surgery, n.d.), which is performed over 15 million times annually (Laparoscopy, n.d.) with a complication rate in pediatric laparoscopy of up to 28% (Schukfeh et al., 2022). Research has shown that most serious complications during pediatric MIS are related to procedural methods and include hemorrhage, visceral or vascular injury, and gut diathermy injury (Sa et al., 2016). One important set of technical skills required for laparoscopic surgery is the Fundamentals of Laparoscopic Surgery (FLS) skills, which include tasks such as peg transfer, knot-tying, and suturing (SAGES FLS committee, 2019). FLS was developed to assess the core knowledge and skills needed by surgeons to conduct basic laparoscopic surgery procedures (Zheng et al., 2009). Accordingly, research has shown that proficiency in FLS skills improves performance in the operating room (Sroka et al., 2009). One example is the peg transfer task, which develops depth perception (Kolozsvari et al., 2011) and, if not mastered, can negatively impact laparoscopic performance (Suleman et al., 2010).

Simulation-based training (SBT) has proven effective in transferring skills to surgical settings (Dawe et al., 2013) and enhancing patient safety in laparoscopic surgery (Gause et al., 2016; Vanderbilt et al., 2014), and it is an effective teaching method in pediatric surgical education (Lopreiato & Sawyer, 2015). However, for simulators to be successfully integrated into training programs, they must first be validated to ensure they are effectively teaching and training the required skills. Specifically, simulators need to demonstrate three types of validity: face, content, and construct validity (Leijte et al., 2019). Face validity pertains to how realistic the simulator is and whether it depicts what it is intended to depict (McDougall, 2007). Content validity involves assessing the suitability and usefulness of the simulator as a teaching method (Hung et al., 2011; McDougall, 2007). Finally, construct validity is the ability of a simulator to differentiate between expert and novice surgeons (McDougall, 2007). Together, these forms of validation help ensure that a simulator is realistic and useful enough to represent the skills required in a real-life surgical setting (Alsalamah et al., 2017) and that it can serve as an evaluation tool (Gallagher et al., 2003). Specifically, validated laparoscopic simulators have been shown to have potential as effective and prominent training tools (Mori et al., 2022; Toale et al., 2022). However, simulators in pediatric surgery have received relatively little scientific attention, which shows that further research is needed in this field (Azzie et al., 2011; Najmaldin, 2007). Specifically, advanced skills needed for pediatric surgery cannot be taught using regular surgery simulation training (Georgeson & Owings, 2000). This highlights a need to design simulators to teach and overcome the difficulties associated with pediatric laparoscopic skills (Hamilton et al., 2011).

One form of SBT used in laparoscopic simulation training is augmented reality (AR) simulators (Botden & Jakimowicz, 2008). AR simulators can provide haptic feedback (Botden & Jakimowicz, 2008) and enhance trainees' skills transfer to the clinical environment (Aggarwal et al., 2004; Van Sickle et al., 2005). A literature review conducted by Zhu et al. (2014) found that 96% of the papers reviewed illustrated that AR results in less training required, lower failure rates, improved performance, and a shorter learning curve.

To improve current training in pediatric laparoscopic surgery and capitalize on the effectiveness of AR simulators, a new augmented reality (AR) simulator was developed that integrates real-time feedback. However, the validity of this AR simulator has yet to be explored. Therefore, the goal of this study was to determine initial face, content, and construct validity of a new AR simulator for the FLS peg transfer task in pediatric and regular laparoscopic training.

Methods

Participants

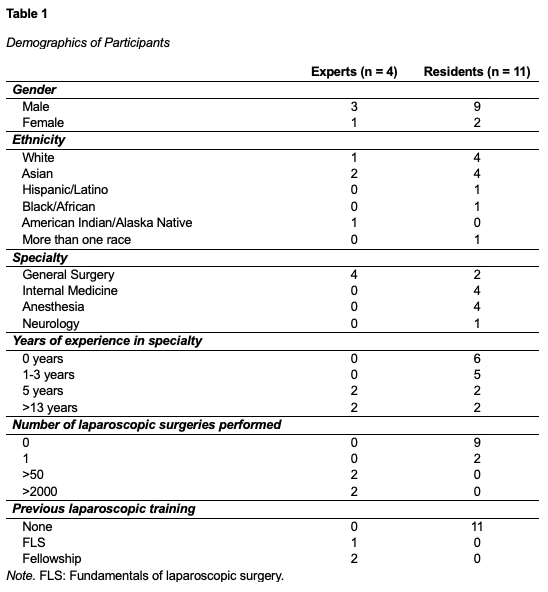

Four experts and eleven novice medical residents from Hershey Medical Center were recruited. Residents were classified as novices if they had no laparoscopic experience or had performed fewer than 50 laparoscopic surgeries, whereas experts had performed more than 50 laparoscopic surgeries (Buzink et al., 2008). The demographics of the participants are shown in Table 1.

Equipment

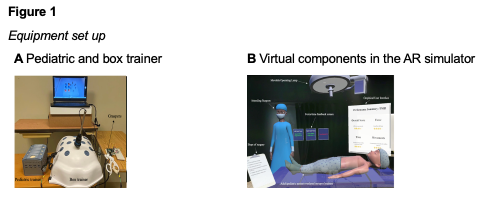

A 3-D printed pediatric FLS laparoscopic trainer was developed with dimensions of 230 mm × 140 mm × 126 mm. A commercially available Medicinology & Co standard box trainer with dimensions of 455 mm × 395 mm × 220 mm was used for regular laparoscopic training. To provide real-time force and time feedback, an AR simulator using Microsoft HoloLens was developed. The AR simulator included a virtual patient, surgeon, clipboards with training instructions, operating lamp, heart rate monitor, and simulated post-training feedback. Figure 1 shows a visual representation of the equipment. The Tobii Pro 3 eye trackers were also used to track participants' eye-gaze data during the task.

Task and Study Conditions

In this study, participants had to complete the peg transfer task, which involves six rubber pegs and a pegboard. Participants were required to use laparoscopic graspers to grab each peg with their non-dominant hand, transfer it mid-air to their dominant hand, and then place it on the opposite side of the pegboard.

The peg transfer task was performed under one of four training conditions: (1) regular trainer with no feedback, then pediatric trainer with no feedback; (2) regular trainer with no feedback, then pediatric trainer with feedback; (3) regular trainer with feedback, then pediatric trainer with no feedback; or (4) regular trainer with feedback, then pediatric trainer with feedback. The AR simulator was used to provide feedback.
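The four conditions form a 2 × 2 crossing of feedback (none vs. AR feedback) over the two trainers, always with the regular trainer first. A minimal sketch of that structure is given here; the dictionary keys and the labels "NF" and "F" are illustrative names, not identifiers used in the study.

```python
from itertools import product

# Feedback is either absent ("NF") or provided by the AR simulator ("F").
FEEDBACK_LEVELS = ("NF", "F")

# Every participant uses the regular box trainer first, then the pediatric trainer.
# Crossing the two feedback levels over both trainers yields the four conditions.
conditions = [
    {"condition": i, "regular_trainer": regular, "pediatric_trainer": pediatric}
    for i, (regular, pediatric) in enumerate(product(FEEDBACK_LEVELS, repeat=2), start=1)
]

for c in conditions:
    print(c)
```

Enumerating the conditions this way makes it easy to see which participants ever wore the HoloLens: those in any condition where at least one trainer has the value "F".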

Procedure

First, procedures were explained and informed consent was obtained. Participants then received $15 compensation. Participants completed surveys on prior FLS experience, pre-task self-efficacy, NASA Task Load Index (TLX), and mental workload. Next, experts were assigned to one of the four conditions, and residents were systematically distributed across conditions. In all conditions, participants wore eye trackers. For the conditions with feedback, participants wore the HoloLens over the eye trackers and watched a 32-second instructional video about the AR simulator. The eye trackers and HoloLens were calibrated for each participant. Then, the peg transfer task was explained, and participants began performing the task. After completing the peg transfer task on each trainer, all participants completed post-task self-efficacy, NASA TLX, workload, and face and content validity questionnaires.

Outcome Measures

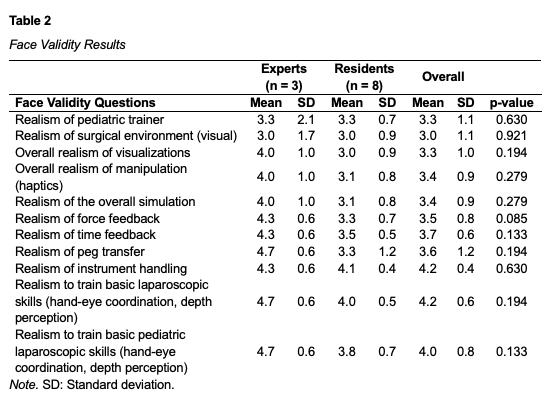

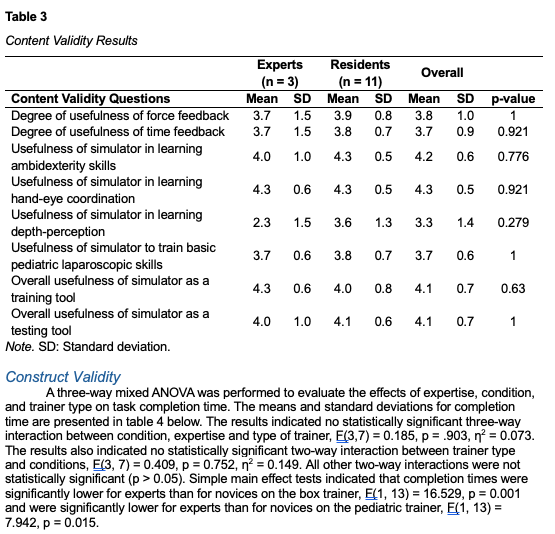

The main outcomes studied were face, content, and construct validity. Face validity was assessed using an 11-item questionnaire on a 5-point Likert scale, ranging from 1 (not at all realistic) to 5 (extremely realistic). Content validity was determined using an 8-item questionnaire on a 5-point Likert scale, ranging from 1 (not at all useful) to 5 (extremely useful). Both the face validity (Arikatla et al., 2012; Sankaranarayanan et al., 2010) and content validity (Escamirosa et al., 2014; Schreuder et al., 2009) questionnaires were adapted from previous studies. Only survey responses from participants who received feedback on the AR pediatric trainer were used for this analysis, resulting in 3 experts and 8 residents.
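As an illustration of how a single questionnaire item can be summarized as mean ± SD, a minimal sketch follows. The ratings are hypothetical and are not study data, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not state which form was used.

```python
from statistics import mean, stdev

def summarize_item(ratings):
    """Return (mean, sample SD) for one questionnaire item, rounded to 1 decimal."""
    for r in ratings:
        if not 1 <= r <= 5:
            raise ValueError(f"rating {r} is outside the 5-point scale")
    return round(mean(ratings), 1), round(stdev(ratings), 1)

# Hypothetical ratings from 11 respondents for a single item (not study data).
example_item = [4, 5, 4, 3, 4, 5, 4, 4, 3, 5, 4]
m, sd = summarize_item(example_item)
print(f"{m} ± {sd}")
```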

Construct validity was assessed by measuring the time and number of errors during the peg transfer task. For each trainer, time to complete the peg transfer was recorded in seconds from picking up the first peg to transferring the last peg (SAGES FLS committee, 2019). Errors were quantified as the number of times pegs were dropped during the task (Rhee et al., 2014).
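The two construct validity measures can be sketched as a computation over a timestamped event log. The log format, the `Event` class, and the event labels here are hypothetical and serve only to illustrate the definitions above; the text does not describe how events were actually recorded.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    t: float   # seconds since the recording started
    kind: str  # hypothetical labels: "pickup", "transferred", or "drop"

def trial_metrics(events):
    """Return (completion_time_s, n_drops) for one peg transfer trial."""
    pickups = [e.t for e in events if e.kind == "pickup"]
    transfers = [e.t for e in events if e.kind == "transferred"]
    if not pickups or not transfers:
        raise ValueError("trial needs at least one pickup and one completed transfer")
    # Time runs from the first pick-up to the last completed transfer.
    completion_time = max(transfers) - min(pickups)
    # Each dropped peg counts as one error.
    n_drops = sum(1 for e in events if e.kind == "drop")
    return completion_time, n_drops

# Hypothetical event log for a short trial (not study data).
log = [
    Event(2.0, "pickup"), Event(9.5, "transferred"),
    Event(11.0, "pickup"), Event(14.0, "drop"),
    Event(16.0, "pickup"), Event(24.5, "transferred"),
]
time_s, drops = trial_metrics(log)
print(f"time = {time_s:.1f} s, errors = {drops}")
```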

Results

Face Validity

The Mann-Whitney U test showed no statistically significant difference in response scores between experts and novices (p > 0.05) for any question (see Table 2). Data are reported as mean ± standard deviation (SD). Results also showed that all 11 questions were rated above the median (3.0). The highest rated statements were related to realism to train basic laparoscopic skills like hand-eye coordination and depth perception (4.2 ± 0.6), instrument handling (4.2 ± 0.4), and realism to train basic pediatric laparoscopic skills (4.0 ± 0.8). The lowest rated statements were related to realism of the surgical environment (3.0 ± 1.1) and overall realism of visualizations (3.3 ± 1.0).
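For readers unfamiliar with the test, a minimal sketch of a Mann-Whitney comparison for groups as small as 3 experts and 8 residents is given here. It computes the U statistic from midranks and an exact two-sided p-value by enumerating every possible group assignment. This is an illustrative stdlib-only implementation, not the software used in the study, and the ratings are hypothetical.

```python
from itertools import combinations

def midranks(values):
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def mann_whitney_exact(group_a, group_b):
    """Return (U for group_a, exact two-sided p-value) by full enumeration."""
    pooled = list(group_a) + list(group_b)
    ranks = midranks(pooled)
    n_a, n = len(group_a), len(pooled)
    expected = n_a * (n + 1) / 2       # mean rank sum of group_a under H0
    observed = sum(ranks[:n_a])        # observed rank sum of group_a
    u_a = observed - n_a * (n_a + 1) / 2
    # Count assignments whose rank sum deviates at least as much as observed.
    extreme = total = 0
    for idx in combinations(range(n), n_a):
        total += 1
        w = sum(ranks[i] for i in idx)
        if abs(w - expected) >= abs(observed - expected) - 1e-9:
            extreme += 1
    return u_a, extreme / total

# Hypothetical Likert ratings for one item (not study data).
experts = [4, 5, 4]
novices = [4, 3, 5, 4, 4, 3, 5, 4]
u, p = mann_whitney_exact(experts, novices)
print(f"U = {u}, p = {p:.3f}")
```

Full enumeration is practical here because choosing 3 of 11 respondents gives only 165 assignments; for larger samples a normal approximation is usually used instead.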

Content Validity

The Mann-Whitney U test showed no statistically significant difference in response scores between experts and novices (p > 0.05) for any question (see Table 3). Results also showed that all 8 questions were rated above the median (3.0). The highest rated statements were related to the usefulness of the simulator in learning hand-eye coordination (4.3 ± 0.5), in learning ambidexterity skills (4.2 ± 0.6), and as a training and testing tool (4.1 ± 0.7). The lowest rated statements were related to the usefulness of the simulator in learning depth perception (3.3 ± 1.4) and time feedback (3.7 ± 0.9). All survey responses from participants in the first three conditions who used the AR simulator were included.

Construct Validity

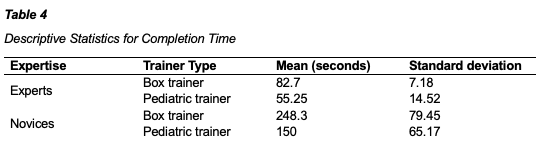

A three-way mixed ANOVA was performed to evaluate the effects of expertise, condition, and trainer type on task completion time. The means and standard deviations for completion time are presented in Table 4. The results indicated no statistically significant three-way interaction between condition, expertise, and trainer type, F(3, 7) = 0.185, p = 0.903, η² = 0.073. The results also indicated no statistically significant two-way interaction between trainer type and condition, F(3, 7) = 0.409, p = 0.752, η² = 0.149. All other two-way interactions were not statistically significant (p > 0.05). Simple main effect tests indicated that completion times were significantly lower for experts than for novices on the box trainer, F(1, 13) = 16.529, p = 0.001, and on the pediatric trainer, F(1, 13) = 7.942, p = 0.015.
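One common way to obtain a simple main effect of expertise on a single trainer is a one-way between-subjects ANOVA on that trainer's data, which for 4 experts and 11 novices has (1, 13) degrees of freedom. A minimal sketch follows; it is an assumption that the simple main effects were computed this way, and the completion times are hypothetical.

```python
from statistics import mean

def one_way_f(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    all_values = [x for g in groups for x in g]
    grand = mean(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Hypothetical completion times in seconds on one trainer (not study data).
expert_times = [48.0, 55.0, 51.0, 60.0]
novice_times = [95.0, 110.0, 88.0, 120.0, 102.0, 99.0, 130.0, 91.0, 115.0, 107.0, 98.0]
f, df1, df2 = one_way_f(expert_times, novice_times)
print(f"F({df1}, {df2}) = {f:.3f}")
```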

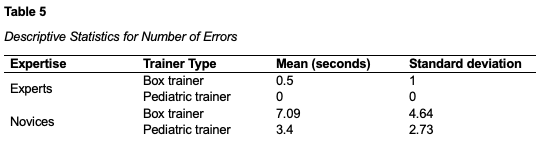

Another three-way mixed ANOVA was performed to evaluate the effects of expertise, condition, and trainer type on number of errors. The means and standard deviations for number of errors are presented in Table 5. The results indicated no statistically significant three-way interaction between condition, expertise, and trainer type, F(3, 7) = 0.167, p = 0.916, partial η² = 0.067. The results also indicated no statistically significant two-way interaction between trainer type and condition, F(3, 7) = 0.133, p = 0.937, η² = 0.054. All other two-way interactions were not statistically significant (p > 0.05). Simple main effect tests indicated that the number of errors was significantly lower for experts than for novices on the box trainer, F(1, 13) = 16.529, p = 0.016, and on the pediatric trainer, F(1, 13) = 5.788, p = 0.032.
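The effect sizes reported for the interaction terms can be cross-checked from the F statistics and their degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). A minimal sketch, using only the values reported in the Results; the function name and dictionary labels are illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F statistics and degrees of freedom as reported in the Results section.
reported = {
    "time: condition x expertise x trainer": (0.185, 3, 7),
    "time: trainer x condition": (0.409, 3, 7),
    "errors: condition x expertise x trainer": (0.167, 3, 7),
    "errors: trainer x condition": (0.133, 3, 7),
}

for label, (f_value, df_effect, df_error) in reported.items():
    print(f"{label}: {partial_eta_squared(f_value, df_effect, df_error):.3f}")
```

The four results round to 0.073, 0.149, 0.067, and 0.054, which agrees with the reported values and suggests that all four effect sizes are partial η².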

Discussion

SBT can significantly enhance patient safety in laparoscopic surgery (Gause et al., 2016; Vanderbilt et al., 2014). To improve training in pediatric MIS, a new AR simulator was developed. This study aimed to determine initial face, content, and construct validity of the AR simulator. Results indicated no significant difference between experts' and novices' opinions on the face and content validity questionnaires, suggesting both groups found the AR simulator realistic and useful. This consensus is important because experts and residents have different perceptions regarding their learning needs, so residents' perceptions should also be included when identifying needs in their training (Pugh et al., 2007).

All 11 statements on the face validity questionnaire were rated above the median (3.0), including the realism of the pediatric trainer and AR visualizations, which signifies successful face validation for surgical simulators (Arikatla et al., 2012; Dorozhkin et al., 2016). These results indicate that the AR simulator achieved successful initial face validation and was perceived as a realistic training tool in its initial stages. This is important because face validation ensures the simulator's potential utility and successful integration (Raje et al., 2016; Wentink, 2001). Similarly, all eight statements on the content validity questionnaire were rated above the median, which can also be considered successful content validation (Dorozhkin et al., 2016). Both groups found the simulator useful for training hand-eye coordination, depth perception, force and time feedback, ambidexterity skills, and as a training and testing tool. Effective hand-eye coordination and depth perception are critical for laparoscopic surgery to avoid performance errors and ensure patient safety (Raje et al., 2016; Wentink, 2001). These results suggest the simulator's potential as a useful training tool since content validity can be illustrative of skills required in a real-life surgical setting (Alsalamah et al., 2017).

Results also showed significant differences in time and errors between experts and novices on the pediatric and box trainers with and without the AR simulator, which demonstrates initial construct validity of the simulators. This ability to distinguish skill levels is essential for integrating simulators into medical education (McDougall, 2007). Such simulators can be used to evaluate and credential surgeons objectively (Duffy et al., 2004; Schout et al., 2009). Objective approaches to assessing surgical trainees are increasingly in demand to enhance the effectiveness of skill transfer (Ahmed et al., 2013) and are crucial in ensuring patient safety in the operating room (Shaharan & Neary, 2014).

Overall, the AR simulator shows promise as a valuable training tool that could improve pediatric MIS training and potentially reduce operating room errors with further development and validation (Arikatla et al., 2012). However, a limitation of this study is its small sample size. Additionally, participants completed only half the peg transfer task on each trainer due to time constraints. Future work can recruit a larger sample of participants who perform the complete peg transfer task to further validate the simulator.

Conclusion

The goal of this study was to determine initial face, content, and construct validity of a new AR simulator to contribute to the field of pediatric SBT. Surgical simulators must be validated before integrating them into medical programs. The results of our study showed that the AR simulator with the pediatric trainer was perceived to be a realistic and useful training tool for learning skills such as hand-eye coordination and ambidexterity. In addition, it was able to differentiate between experts and novices on the peg transfer task. As such, the AR simulator may help enhance training in pediatric laparoscopic surgery. Future work will involve improving the current simulator and validating it across other laparoscopic training tasks.

References

Aggarwal, R., Moorthy, K., & Darzi, A. (2004). Laparoscopic skills training and assessment. British Journal of Surgery, 91(12), 1549–1558. https://doi.org/10.1002/bjs.4816

Ahmed, A., Ishman, S. L., Laeeq, K., & Bhatti, N. I. (2013). Assessment of improvement of trainee surgical skills in the operating room for tonsillectomy. The Laryngoscope, 123(7), 1639–1644. https://doi.org/10.1002/lary.24023

Alsalamah, A., Campo, R., Tanos, V., Grimbizis, G., Van Belle, Y., Hood, K., Pugh, N., & Amso, N. (2017). Face and content validity of the virtual reality simulator ‘ScanTrainer®.’ Gynecological Surgery, 14(1). https://doi.org/10.1186/s10397-017-1020-6

Arikatla, V. S., Sankaranarayanan, G., Ahn, W., Chellali, A., De, S., Caroline, G. L., Hwabejire, J., DeMoya, M., Schwaitzberg, S., & Jones, D. B. (2012). Face and construct validation of a virtual peg transfer simulator. Surgical Endoscopy, 27(5), 1721–1729. https://doi.org/10.1007/s00464-012-2664-y

Azzie, G., Gerstle, J. T., Nasr, A., Lasko, D., Green, J., Henao, O., Farcas, M., & Okrainec, A. (2011). Development and validation of a pediatric laparoscopic surgery simulator. Journal of Pediatric Surgery, 46(5), 897–903. https://doi.org/10.1016/j.jpedsurg.2011.02.026

Botden, S. M. B. I., & Jakimowicz, J. J. (2008). What is going on in augmented reality simulation in laparoscopic surgery? Surgical Endoscopy, 23(8), 1693–1700. https://doi.org/10.1007/s00464-008-0144-1

Buzink, S. N., Botden, S. M. B. I., Heemskerk, J., Goossens, R. H. M., De Ridder, H., & Jakimowicz, J. J. (2008). Camera navigation and tissue manipulation; are these laparoscopic skills related? Surgical Endoscopy, 23(4), 750–757. https://doi.org/10.1007/s00464-008-0057-z

Dawe, S. R., Windsor, J. A., Broeders, J. A., Cregan, P. C., Hewett, P. J., & Maddern, G. J. (2013). A Systematic Review of Surgical Skills Transfer After Simulation-Based Training. Annals of Surgery, 259(2), 236–248. https://doi.org/10.1097/sla.0000000000000245

Dorozhkin, D., Nemani, A., Roberts, K., Ahn, W., Halic, T., Dargar, S., Wang, J., Cao, C. G. L., Sankaranarayanan, G., & De, S. (2016). Face and content validation of a Virtual Translumenal Endoscopic Surgery Trainer (VTEST™). Surgical Endoscopy, 30(12), 5529–5536. https://doi.org/10.1007/s00464-016-4917-7

Duffy, A. J., Hogle, N. J., McCarthy, H., Lew, J. I., Egan, A., Christos, P., & Fowler, D. L. (2004). Construct validity for the LAPSIM laparoscopic surgical simulator. Surgical Endoscopy, 19(3), 401–405. https://doi.org/10.1007/s00464-004-8202-9

Escamirosa, F. P., Flores, R. M. O., García, I. O., Vidal, C. R. Z., & Martínez, A. M. (2014). Face, content, and construct validity of the EndoViS training system for objective assessment of psychomotor skills of laparoscopic surgeons. Surgical Endoscopy, 29(11), 3392–3403. https://doi.org/10.1007/s00464-014-4032-6

Gallagher, A. G., Ritter, E. M., & Satava, R. M. (2003). Fundamental principles of validation, and reliability: rigorous science for the assessment of surgical education and training. Surgical Endoscopy, 17(10), 1525–1529. https://doi.org/10.1007/s00464-003-0035-4

Gause, C. D., Hsiung, G., Schwab, B., Clifton, M., Harmon, C. M., & Barsness, K. A. (2016). Advances in Pediatric Surgical Education: A critical appraisal of two consecutive minimally invasive pediatric surgery training courses. Journal of Laparoendoscopic & Advanced Surgical Techniques, 26(8), 663–670. https://doi.org/10.1089/lap.2016.0249

Georgeson, K. E., & Owings, E. (2000). Advances in minimally invasive surgery in children. The American Journal of Surgery, 180(5), 362–364. https://doi.org/10.1016/s0002-9610(00)00554-7

Hamilton, J. M., Kahol, K., Vankipuram, M., Ashby, A., Notrica, D. M., & Ferrara, J. J. (2011). Toward effective pediatric minimally invasive surgical simulation. Journal of Pediatric Surgery, 46(1), 138–144. https://doi.org/10.1016/j.jpedsurg.2010.09.078

Hung, A. J., Zehnder, P., Patil, M. B., Cai, J., Ng, C. K., Aron, M., Gill, I. S., & Desai, M. M. (2011). Face, content and construct validity of a novel robotic surgery simulator. The Journal of Urology, 186(3), 1019–1025. https://doi.org/10.1016/j.juro.2011.04.064

Kolozsvari, N. O., Kaneva, P., Brace, C., Chartrand, G., Vaillancourt, M., Cao, J., Banaszek, D., Demyttenaere, S., Vassiliou, M. C., Fried, G. M., & Feldman, L. S. (2011). Mastery versus the standard proficiency target for basic laparoscopic skill training: effect on skill transfer and retention. Surgical Endoscopy, 25(7), 2063–2070. https://doi.org/10.1007/s00464-011-1743-9

Laparoscopy. (n.d.). North Kansas City Hospital. Retrieved May 7, 2025, from https://www.nkch.org/find-a-service/surgery/minimally-invasive-procedures/laparoscopy

Leijte, E., Arts, E., Witteman, B., Jakimowicz, J., De Blaauw, I., & Botden, S. (2019). Construct, content and face validity of the eoSim laparoscopic simulator on advanced suturing tasks. Surgical Endoscopy, 33(11), 3635–3643. https://doi.org/10.1007/s00464-018-06652-3

Lopreiato, J. O., & Sawyer, T. (2015). Simulation-Based medical education in Pediatrics. Academic Pediatrics, 15(2), 134–142. https://doi.org/10.1016/j.acap.2014.10.010

Mattingly, A. S., Chen, M. M., Divi, V., Holsinger, F. C., & Saraswathula, A. (2022). Minimally Invasive Surgery in the United States, 2022: Understanding its value using new datasets. Journal of Surgical Research, 281, 33–36. https://doi.org/10.1016/j.jss.2022.08.006

McDougall, E. M. (2007). Validation of surgical simulators. Journal of Endourology, 21(3), 244–247. https://doi.org/10.1089/end.2007.9985

Minimally invasive surgery. (n.d.). Boston Children’s Hospital. Retrieved May 7, 2025, from https://www.childrenshospital.org/treatments/minimally-invasive-surgery

Mori, T., Ikeda, K., Takeshita, N., Teramura, K., & Ito, M. (2022). Validation of a novel virtual reality simulation system with the focus on training for surgical dissection during laparoscopic sigmoid colectomy. BMC Surgery, 22(1). https://doi.org/10.1186/s12893-021-01441-7

Najmaldin, A. (2007). Skills training in pediatric minimal access surgery. Journal of Pediatric Surgery, 42(2), 284–289. https://doi.org/10.1016/j.jpedsurg.2006.10.033

Pugh, C. M., DaRosa, D. A., Glenn, D., & Bell, R. H. (2007). A comparison of faculty and resident perception of resident learning needs in the operating room. Journal of Surgical Education, 64(5), 250–255. https://doi.org/10.1016/j.jsurg.2007.07.007

Raje, S., Sinha, R., & Rao, G. (2016). Three-dimensional laparoscopy: Principles and practice. Journal of Minimal Access Surgery, 13(3), 165. https://doi.org/10.4103/0972-9941.181761

Rhee, R., Fernandez, G., Bush, R., & Seymour, N. E. (2014). The effects of viewing axis on laparoscopic performance: a comparison of non-expert and expert laparoscopic surgeons. Surgical Endoscopy, 28(9), 2634–2640. https://doi.org/10.1007/s00464-014-3515-9

Sa, W., Gn, M., Na, B., Aa, B., S, K., & Fh, A. (2016). Our experience of laparoscopic surgery in children during the learning curve. Medical Reports & Case Studies, 01(01). https://doi.org/10.4172/2572-5130.1000102

SAGES FLS committee. (2019). Technical Skills Proficiency-Based Training Curriculum. In Fundamentals of Laparoscopic Surgery. https://www.flsprogram.org/wp-content/uploads/2014/02/Proficiency-Based-Curriculum-updated-May-2019-v24-.pdf

Sankaranarayanan, G., Lin, H., Arikatla, V. S., Mulcare, M., Zhang, L., Derevianko, A., Lim, R., Fobert, D., Cao, C., Schwaitzberg, S. D., Jones, D. B., & De, S. (2010). Preliminary face and construct validation study of a virtual basic laparoscopic skill trainer. Journal of Laparoendoscopic & Advanced Surgical Techniques, 20(2), 153–157. https://doi.org/10.1089/lap.2009.0030

Schout, B. M. A., Hendrikx, A. J. M., Scheele, F., Bemelmans, B. L. H., & Scherpbier, A. J. J. A. (2009). Validation and implementation of surgical simulators: a critical review of present, past, and future. Surgical Endoscopy, 24(3), 536–546. https://doi.org/10.1007/s00464-009-0634-9

Schreuder, H. W., Van Dongen, K. W., Roeleveld, S. J., Schijven, M. P., & Broeders, I. A. (2009). Face and construct validity of virtual reality simulation of laparoscopic gynecologic surgery. American Journal of Obstetrics and Gynecology, 200(5), 540.e1-540.e8. https://doi.org/10.1016/j.ajog.2008.12.030

Schukfeh, N., Abo-Namous, R., Madadi-Sanjani, O., Uecker, M., Petersen, C., Ure, B. M., & Kuebler, J. F. (2022). The role of laparoscopic treatment of choledochal malformation in Europe: A Single-Center Experience and Review of the literature. European Journal of Pediatric Surgery, 32(06), 521–528. https://doi.org/10.1055/s-0042-1749435

Shaharan, S., & Neary, P. (2014). Evaluation of surgical training in the era of simulation. World Journal of Gastrointestinal Endoscopy, 6(9), 436. https://doi.org/10.4253/wjge.v6.i9.436

Sood, A., Meyer, C. P., Abdollah, F., Sammon, J. D., Sun, M., Lipsitz, S. R., Hollis, M., Weissman, J. S., Menon, M., & Trinh, Q. (2017). Minimally invasive surgery and its impact on 30-day postoperative complications, unplanned readmissions and mortality. British Journal of Surgery, 104(10), 1372–1381. https://doi.org/10.1002/bjs.10561

Sroka, G., Feldman, L. S., Vassiliou, M. C., Kaneva, P. A., Fayez, R., & Fried, G. M. (2009). Fundamentals of Laparoscopic Surgery simulator training to proficiency improves laparoscopic performance in the operating room—a randomized controlled trial. The American Journal of Surgery, 199(1), 115–120. https://doi.org/10.1016/j.amjsurg.2009.07.035

Suleman, R., Yang, T., Paige, J., Chauvin, S., Alleyn, J., Brewer, M., Johnson, S. I., & Hoxsey, R. J. (2010). Hand-Eye dominance and depth perception effects in performance on a basic laparoscopic skills set. JSLS Journal of the Society of Laparoscopic & Robotic Surgeons, 14(1), 35–40. https://doi.org/10.4293/108680810x12674612014428

Toale, C., Morris, M., & Kavanagh, D. O. (2022). Training and assessment using the LapSim laparoscopic simulator: a scoping review of validity evidence. Surgical Endoscopy, 37(3), 1658–1671. https://doi.org/10.1007/s00464-022-09593-0

Uecker, M., Kuebler, J. F., Ure, B. M., & Schukfeh, N. (2020). Minimally Invasive Pediatric Surgery: the learning curve. European Journal of Pediatric Surgery, 30(02), 172–180. https://doi.org/10.1055/s-0040-1703011

Van Sickle, K. R., McClusky, D. A., III, Gallagher, A. G., & Smith, C. D. (2005). Construct validation of the ProMIS simulator using a novel laparoscopic suturing task. Surgical Endoscopy, 19(9), 1227–1231. https://doi.org/10.1007/s00464-004-8274-6

Vanderbilt, A. A., Grover, A. C., Pastis, N. J., Feldman, M., Granados, D. D., Murithi, L. K., & Mainous, A. G., III. (2014). Randomized Controlled Trials: A Systematic review of Laparoscopic Surgery and Simulation-Based Training. Global Journal of Health Science, 7(2). https://doi.org/10.5539/gjhs.v7n2p310

Wentink, B. (2001). Eye-hand coordination in laparoscopy - an overview of experiments and supporting aids. Minimally Invasive Therapy & Allied Technologies, 10(3), 155–162. https://doi.org/10.1080/136457001753192277

Zheng, B., Hur, H., Johnson, S., & Swanström, L. L. (2009). Validity of using Fundamentals of Laparoscopic Surgery (FLS) program to assess laparoscopic competence for gynecologists. Surgical Endoscopy, 24(1), 152–160. https://doi.org/10.1007/s00464-009-0539-7

Zhu, E., Hadadgar, A., Masiello, I., & Zary, N. (2014). Augmented reality in healthcare education: an integrative review. PeerJ, 2, e469. https://doi.org/10.7717/peerj.469